Part 1: Rewriting the LMS Story

Note for my semi-regular (not so much lately) readers: Apologies for my absence. I recently took a position with a new organization. The transition has soaked up all of my cycles, but I think things are starting to normalize.

What would you build to enable access to structured learning opportunities?

If you were going to design a scalable system that enabled broad access to training opportunities across a large, geographically dispersed organization, what kind of system would you build? If you wanted to show an organization’s collective progress toward proficiency and readiness, what kind of system would you design? Chances are, many of the characteristics and features you would design into such a system would look similar to those offered in a typical LMS.

This piece isn’t meant to bash LMS products, but to question the premise and focus of this type of tool. LMS products are well intended by both the vendors that build them and the organizations that deploy them. Few enterprise systems focus on individual pursuits at such a granular level. LMS deployments *can* be a tremendous strategic asset. Features of LMS *can* deliver great value for every employee in the organization. Unfortunately, many (maybe most) LMS deployments… don’t.

The problem(s) with LMS deployments

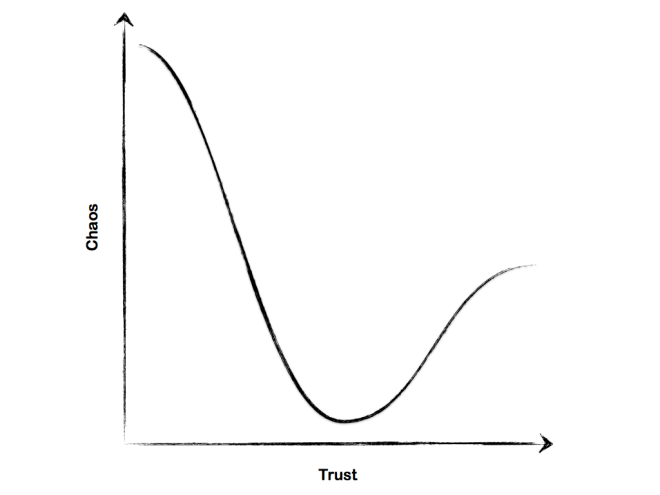

For many folks who use an LMS, these tools are anathema. This perception could stem from a misguided notion (on the part of those who would purport to manage learning) that an internal biological process (learning) can be regulated by a loosely coupled external technological stimulus (a management system). Moreover, many of the most common LMS features devote more energy to serving those who watch the system than to serving those who use it to improve their own performance. This imbalance describes much of the problem I have with the idea of an LMS. These systems focus on “launch (or attend) and track.” They tend to exist for the benefit of the organization as watcher, not the person as experiencer.

What characteristics exemplify these problems?

Again, this isn’t necessarily a swipe at LMS vendors. Customers drive priorities and shape implementations. Without pointing fingers, here are six of my least favorite LMS characteristics, expressed as pseudo-requirements. I think you’ll find at least one thing here that resonates with your own experience.

1. The system must make it easy for a relative few to unleash torturous ideas on the rest of the organization.

The label LMS implies a certain paternal push mentality. We push things to our “learners” in a content monologue. At worst, this monologue is a poorly produced, unreliable, dysfunctional mess with manifestations tantamount to torture. At best, it’s often content on a conveyor belt.

When we make it easy to distribute information, we also make it easy to broadcast well-intended but poorly selected and poorly designed messaging. Much of the e-learning we see hung on learning management systems creates shallow communication without creating deep conversation. Broad messaging with a low cost of entry tends to pour more pollution into an already polluted stream. Sure, an LMS can be super-efficient…

“There is surely nothing quite so useless as doing with great efficiency what should not be done at all.” ~Peter Drucker

At some point, for better or worse, we all have an idea that we think will benefit the business. We don’t always consider whether we should act on it, or how much it will actually matter. The LMS provides a platform for propagating ideas that compete for the participant’s attention, and we don’t always coordinate these broadcasts well.

2. The system must dispense events within a narrow set of human contexts.

Let’s face it. One of the primary value propositions of an LMS is that of an event dispenser. Folks come to the LMS, find something in the catalog, and register for a self-paced or facilitated event.

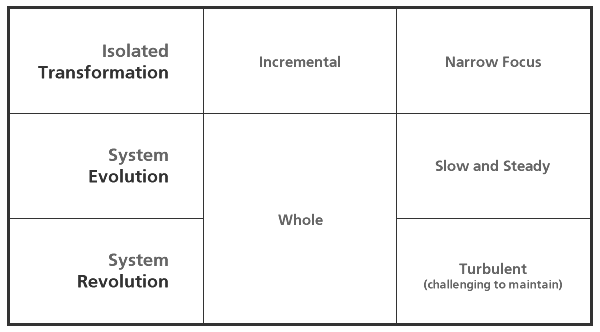

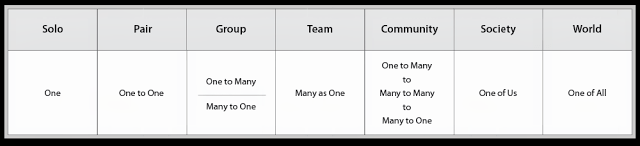

The problem is not that the system functions as an event dispenser. The problem is that its event classifications focus on a very narrow set of human contexts. Most LMS products focus on the solo frame and the group (one-to-many) frame while ignoring the rest of the spectrum.

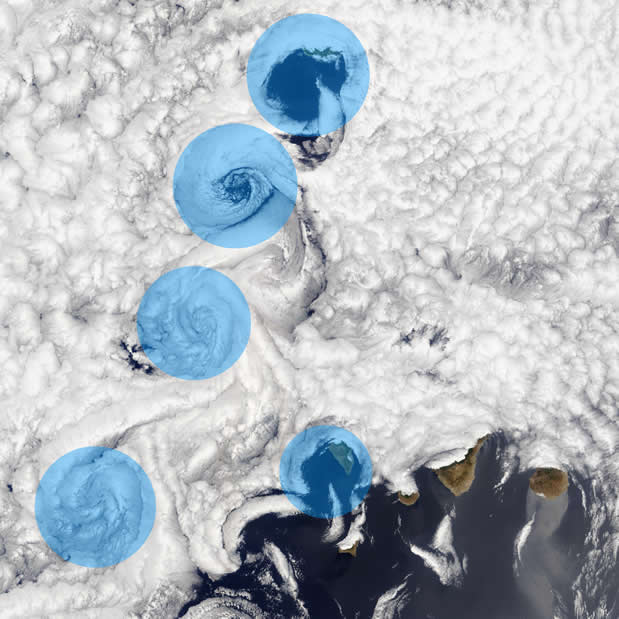

People are often found in pairs, teams, communities, and societies. Funny how we work. An overly narrow focus prevents us from connecting people with people where and when they are, and in the ways we tend to gather naturally. While the LMS doesn’t need to provide all of these opportunities, a well-designed system won’t exclude them and, more importantly, won’t prevent smooth flow between them. It’s not about bolting social tools onto an LMS; it’s about being mindful of these opportunities and opening up the system’s discovery and matching features to take advantage of these contexts.

3. The system must disconnect “Learning” from work.

One of the problems I, and many others, have with the “Learning” management system has to do with the lexicon and language used to describe the container system as well as the stuff that it’s supposed to dispense. There is no question that people *can* learn from pathways created within an LMS. However, the events themselves aren’t learning. People learn. Events don’t.

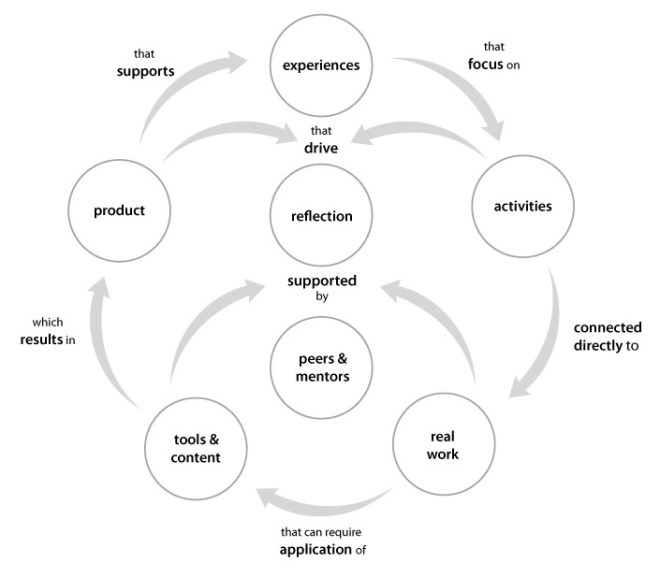

Perhaps the greatest fault of the Learning Management System is the propensity of these fortresses of content solitude to completely isolate learning experiences from work experiences. Whether or not this is intentional, events within the system are typically deployed in ways that remove participants from their work contexts to receive the training experience. While this isn’t always a bad thing, this configuration doesn’t allow for the boundless potential of learning experiences connected with real needs and work challenges. Some of the best learning experiences are directly connected with work. Many of the best work experiences are directly connected with learning.

4. The system must create unpleasant user experiences as a rule.

An LMS content library is a collection of objects. Some of these objects seem to be designed to defecate directly into the soul of the participant. Add to this the catalog-as-link-dumping-ground problem and general usability issues… Horrifying.

5. The system must be activated as “yet-another-IT-system,” completely independent of similar systems.

Large enterprises are full of systems. These separate systems can seem to be in competition for operational territory. Sometimes these systems talk to each other. Sometimes they all use the same authentication pool. Sometimes systems complement the strengths of other systems and bond together to make things easier for the people who use them. Sometimes… they don’t.

6. The system must focus the most energy on the things that mean the least to the people the system is intended to help.

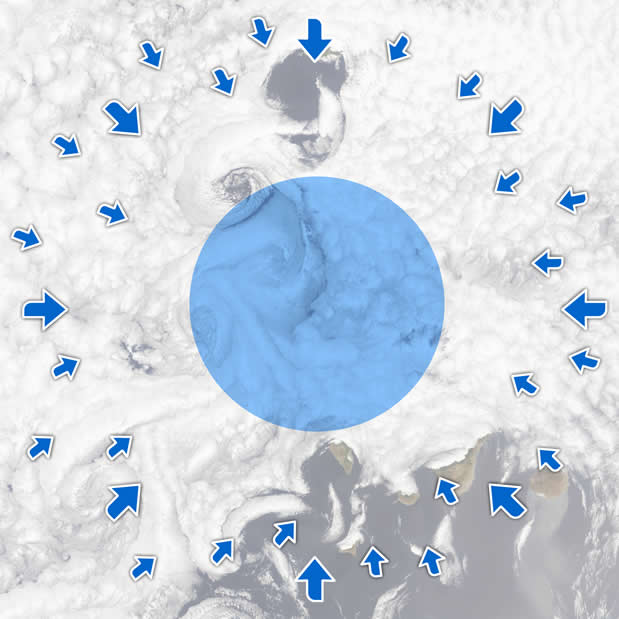

In our attempts to control everything that happens in our organizations, to know who is doing what they have been assigned, and to create reports for reports’ sake, we create monsters. These monsters focus more on features that feed the panopticon cycle than on helping people do what they need to do. Although I question whether a CYA reporting mandate actually affects behavior, external reporting mandates and other hierarchical reporting requirements are necessary. However, these features shouldn’t be the most prevalent uses and value propositions of the LMS.

…that sounds hopeless…

These characteristics, while common, aren’t rules. They don’t need to apply universally to systems intended to help people. We can do better. Future systems, better systems, might not be recognizable (or labeled) as LMS. That would suit me fine. Rather than chasing the management of learning, maybe the systems of the future will focus on Work and Learning Support, with an emphasis on work and support.

This is the vision I’m pushing for in my organization, and I think we’ll get there. In my view, the first step is dropping the idea of management, along with the idea of learning exclusively as a packaged event. Big change. Big promise. Worth changing our collective mindset about LMS.

Image Credit
